Are Medical Schools Checking If You Use AI?

The tension in the room was palpable. My friend (let’s call him Rahul) was obsessively refreshing his email inbox. He’d just submitted his med school application essay, the culmination of weeks of sweat and sleepless nights. As the loading wheel spun, he muttered under his breath, “What if they think I used AI to write it?”

Rahul isn’t alone. From pre-med students perfecting their personal statements to practicing doctors working on research submissions, the question looms large: Are medical schools checking if you use AI? And if they aren’t already, will they use AI checkers in the future? Let’s dive in.

The Stakes Are High If You Use AI

Medical school applications are as much about precision and integrity as they are about storytelling. An applicant’s personal essay is a chance to show admissions committees who they are beyond grades and test scores. But with the explosion of tools like ChatGPT and other generative AI systems, concerns about originality—and fairness—are creeping into the process.

Imagine this: You pour your heart into a personal story about volunteering at a rural clinic, only for admissions officers to wonder if it was written by AI. It’s no surprise that “Do med schools check for AI?” has become a popular search.

Why? Because the stakes couldn’t be higher. Medical schools are training grounds for future surgeons, physicians, and researchers, and integrity matters not just in admissions but throughout their careers. Using AI-generated content in application materials could be seen as cutting corners, or worse, as deceptive.

Why Medical Schools Need to Check for AI Use

Medical schools uphold the highest standards of academic integrity, and for good reason. The journey from student to physician is built on trust, discipline, and rigorous intellectual development. Misused AI tools risk eroding that trust, leaving schools unsure whether candidates truly possess the skills and values the profession demands.

1. Protecting Integrity and Trust:

Medicine isn’t just a science; it’s a moral endeavor. Admissions committees want to ensure applicants can think critically, solve complex problems, and communicate authentically—qualities an over-reliance on AI can obscure. A perfect but AI-generated essay might showcase polish, but it lacks the grit and nuance of lived experience.

2. Leveling the Playing Field:

Not all students have equal access to AI tools, creating disparities in the application process. Schools must guard against unfair advantages, ensuring that the evaluation process reflects effort and originality rather than access to advanced technology.

3. Evaluating Core Competencies:

Writing a personal statement isn’t just about telling a story—it’s a demonstration of key skills like clarity, empathy, and analytical thinking. Overuse of AI could mean these essential traits aren’t accurately represented, skewing the selection process.

4. Addressing Ethical Concerns:

Medicine is guided by a strict ethical framework. If applicants cut corners now, it raises concerns about how they’ll approach ethical dilemmas in their future careers.

5. Adapting to a Changing Landscape:

AI is not inherently bad; it’s a tool. The challenge for medical schools is to distinguish ethical use (e.g., grammar checks, brainstorming) from misuse (e.g., generating entire essays). Drawing that line lets applicants benefit from technology without bypassing the intellectual rigor the profession requires.

In short, medical schools aren’t just testing AI use—they’re testing character, critical thinking, and readiness to meet the demands of one of the most challenging and honorable professions in the world. As AI becomes increasingly integrated into education, this balancing act will only grow more critical.

Pros and Cons of AI Generation

| Aspect | Advantages | Disadvantages |
|---|---|---|
| Efficiency and Speed | AI generates drafts in minutes, saving time for busy individuals juggling multiple responsibilities. | Over-reliance on AI tools can hinder personal skill development in critical thinking and writing. |
| Grammar and Style | Offers real-time grammatical corrections and stylistic improvements, aiding non-native speakers. | Output may lack emotional nuance and personal depth, vital for compelling narratives. |
| Accessibility | Provides an affordable alternative to expensive writing coaches or editors, leveling the playing field. | Excessive dependence might reduce creativity and spontaneity in human expression. |
| Learning Support | Acts as an educational tool, offering feedback to improve sentence structure and vocabulary. | AI-generated content may appear formulaic or predictable, failing to stand out to reviewers. |
| Detection Risks | — | Improved AI detectors increase the chances of submissions being flagged for authenticity issues. |
| Ethical Considerations | — | Using AI excessively may blur ethical boundaries, raising concerns about honesty and originality. |

Who Should Be Checked for AI Use in Medical Education?

1. Applicants to Medical Schools:

Personal statements and application essays are the most likely targets for AI scrutiny. These submissions are supposed to reflect an applicant’s authentic voice, experiences, and motivations. If AI tools are used excessively—or worse, generate the entire essay—they risk undermining the integrity of the admissions process. Admissions officers rely on these essays to assess not only the applicant’s passion for medicine but also their ability to articulate personal insights. Testing these submissions ensures fairness in a highly competitive process.

2. Medical Researchers and Faculty:

Academic research is another critical area where AI use must be monitored. From drafting research papers to analyzing data, AI tools have immense potential to accelerate progress. However, misuse, such as presenting AI-generated findings as original work, can jeopardize the credibility of research and even affect medical practice downstream. Checking academic outputs for AI influence helps protect the quality and trustworthiness of published medical research.

3. Medical Students in Training:

For students already in med school, coursework and exams represent opportunities to develop critical thinking and problem-solving skills. Over-reliance on AI tools in assignments could hinder their intellectual growth and ability to handle real-world challenges. Testing for AI use in training ensures that students develop the hands-on expertise and analytical skills necessary for a medical career, where decisions often mean life or death.

4. Healthcare Professionals Taking Certification or Licensing Exams:

For practicing healthcare workers, certification exams or continuing education requirements could become a target for AI detection. These assessments are designed to test a professional’s knowledge and judgment, not their ability to leverage AI. Testing ensures that healthcare providers remain competent and accountable in their fields.

How Are Med Schools Approaching AI?

So, are medical schools checking if you use AI? The short answer: some are, and more might be soon.

Admissions committees are waking up to the reality of generative AI. The tools themselves are becoming easier to access, cheaper, and harder to detect without specialized software. Some institutions are starting to experiment with AI content detectors to ensure applicants’ essays and research submissions maintain originality.

These tools, like the one Rahul feared, use statistical patterns to flag AI-generated text. No detector is perfect: they can miss AI text that has been heavily edited, and they can wrongly flag human writing. Still, they’re improving. And it’s not just med schools. Academic journals and law schools are also diving headfirst into this AI-detection game.

The Future of Med School AI Checks

Will med schools use AI checkers? The odds are high. But here’s what could happen next:

1. Transparent Policies:

Med schools might begin openly stating their use of AI-detection tools, akin to plagiarism checkers.

2. Guidelines on AI Use:

Instead of banning AI outright, schools might define acceptable use—e.g., using it for grammar correction but not for drafting entire essays.

3. AI Accountability Essays:

Applicants might be asked to explicitly state how they used AI in their application process.

How AI Content Detectors Help

AI content detectors are designed to analyze and identify the characteristics of text that indicate whether it was generated by an AI model or written by a human. These tools use advanced algorithms that examine various linguistic features such as syntax, grammar, coherence, and contextual patterns. The goal is to distinguish between natural human writing and the more structured or formulaic output produced by AI tools.
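To make “linguistic features” a little more concrete, here is a toy illustration in Python. It is not how Decopy AI or any specific commercial detector works; it is a minimal sketch, assuming that one weak signal of machine-generated text is unusually uniform sentence length (sometimes called low “burstiness”). The function names `sentence_lengths` and `burstiness` are hypothetical, and a single score like this is far too crude to judge a real essay.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Return the word count of each sentence (hypothetical helper)."""
    # Crude splitter: break on runs of '.', '!' or '?' plus any trailing spaces.
    parts = re.split(r"[.!?]+\s*", text)
    return [len(p.split()) for p in parts if p.strip()]


def burstiness(text: str) -> float:
    """Toy 'burstiness' score: std dev of sentence length divided by its mean.

    Assumption for illustration only: human prose tends to mix short and long
    sentences, so a low score is one weak hint of machine-like uniformity.
    This is NOT the method of Decopy AI or any particular detector.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # Not enough sentences to measure variation.
    return statistics.pstdev(lengths) / statistics.mean(lengths)


if __name__ == "__main__":
    uniform = "I like medicine. I like helping people. I like science a lot."
    varied = ("Rahul froze. The loading wheel spun for what felt like an entire "
              "night shift in the ER. Then: submitted.")
    print(f"uniform: {burstiness(uniform):.2f}")
    print(f"varied:  {burstiness(varied):.2f}")
```

Running it prints 0.20 for the uniform text and 0.97 for the varied one. Real detectors typically combine many signals, often including how predictable each word is to a language model, which is one reason even they can misjudge short or heavily edited text.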

In medical school admissions, these detectors help schools confirm that applicants are submitting genuine, original work. Essays, research papers, and personal statements are supposed to reflect an applicant’s own experiences and critical thinking, and AI content detectors can flag passages that appear to be artificially generated.

Medical schools and other academic institutions need reliable methods to determine if applicants are submitting AI-generated content. This is especially important in the competitive world of higher education, where students’ writing skills and originality are assessed rigorously. By identifying AI-generated work, these detectors help maintain academic integrity and ensure a fair admissions process.

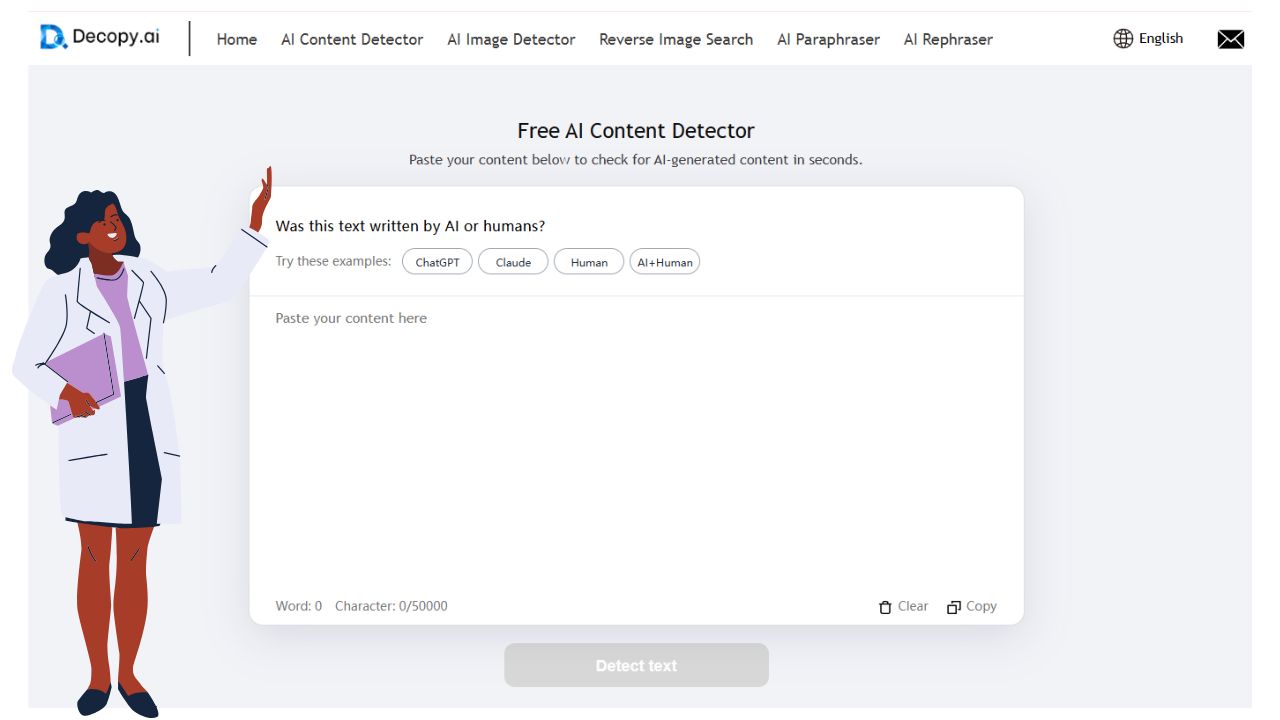

Introducing Decopy AI Content Detector

As AI-generated content becomes more prevalent, the demand for accurate and reliable content detection tools has grown. Among the various available tools, Decopy AI stands out as a highly effective yet simple solution for identifying AI-generated text. Here’s how Decopy AI compares in the landscape of AI content detectors:

1. User-Friendly Interface

One of Decopy AI’s major advantages is its simple, intuitive interface. Users don’t need technical expertise to use the platform effectively. Whether you’re a student, researcher, or educator, Decopy AI’s easy-to-navigate design allows you to upload or paste your text and receive results in just a few seconds.

2. Free to Use Without Registration

Unlike many AI content detectors that require you to sign up or subscribe to a premium service, Decopy AI is entirely free. You can start using the tool immediately without the hassle of creating an account, making it accessible to a wider range of users, from students to educators and institutions.

3. Comprehensive AI Detection

Decopy AI can detect a range of different content types, including:

● ChatGPT-generated text

● Claude-generated text

● Hybrid (AI + Human) content

● Human-written text

This is especially useful for medical schools checking application materials for AI use, since it can help admissions committees distinguish AI-generated text from writing that genuinely reflects the applicant's personal voice and experiences.

4. Multilingual Support

Decopy AI is not limited to just English. It can process and analyze content in multiple languages, which is important for institutions with diverse, international applicants. This broad capability ensures that it can be used by students and institutions globally, whether in the U.S., Europe, or other regions where multiple languages are spoken.

5. Web Page Extension

Decopy AI offers a web page extension that allows you to check content directly on any website. This feature is particularly useful for those who want to quickly analyze text from online sources, such as articles, research papers, or any other publicly available content.

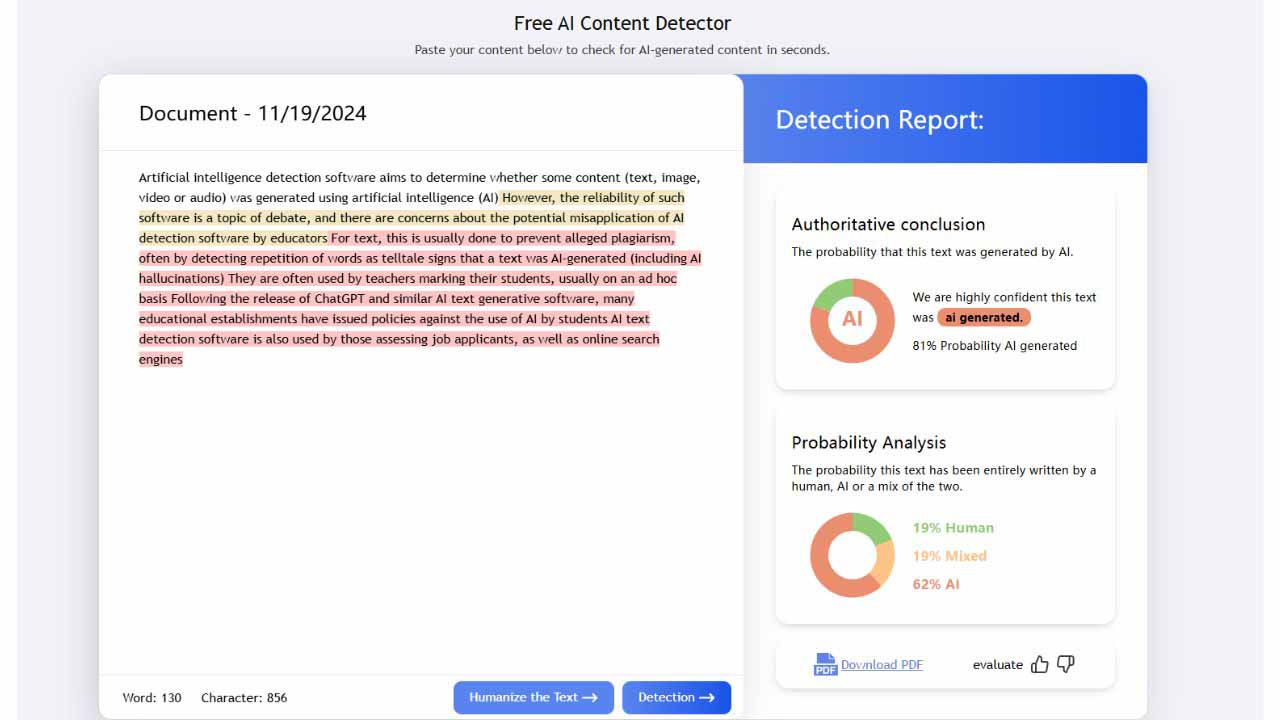

6. Clear, Visual Feedback

Decopy AI uses charts and color-coded feedback to clearly highlight which parts of the text are AI-generated, human-written, or hybrid. This helps users easily interpret the results and take appropriate action. Whether you are preparing your med school application or writing a research paper, Decopy AI’s visual feedback ensures that you can quickly assess the authenticity of your content.

How Decopy AI Supports Medical Schools in AI Content Detection

Decopy AI provides a practical, efficient solution for medical schools to check if applicants have used AI in their application materials. With its advanced detection capabilities, Decopy AI offers institutions the ability to:

● Check AI-generated content:

Decopy AI identifies text generated by popular AI models like ChatGPT and Claude, helping schools verify that applicants are submitting original work.

● Spot hybrid AI+human content:

Some applicants may mix AI-generated text with their own writing. Decopy AI can detect these hybrid pieces and help schools ensure they are evaluating a candidate’s true capabilities.

● Provide transparency:

The charts and visual feedback provided by Decopy AI offer clear insights into the authenticity of submitted content. This helps medical schools make fair and informed decisions about each applicant.

How to Use Decopy AI Content Detector

Using Decopy AI to check your content for AI-generated text is simple and quick. Follow these steps to ensure your writing is original and meets academic standards:

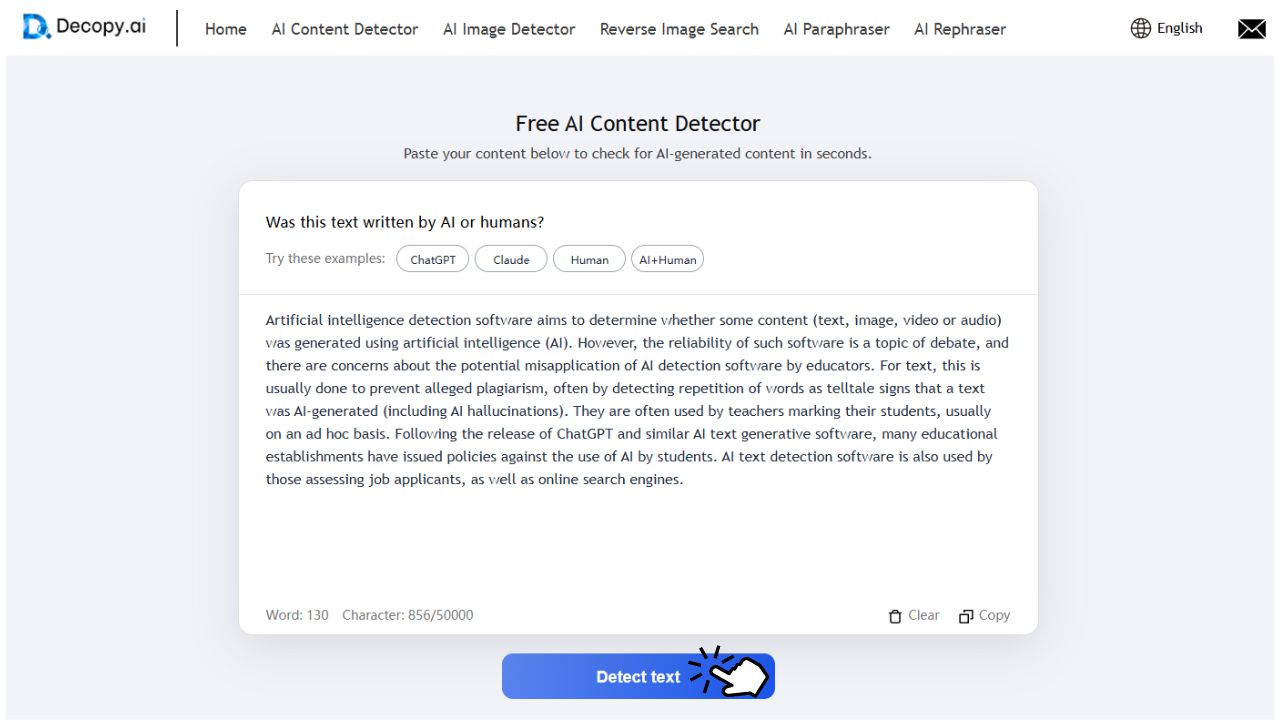

1. Visit the Website

Go to the Decopy AI website from your browser.

2. Select the AI Content Detector

Navigate to the "Detection Tools" section on the website and choose the AI Content Detector from the list of available tools.

3. Paste Your Text

Copy your essay, research paper, or written content, and paste it into the designated text box provided on the tool page.

4. Initiate the Check

After pasting your text, click on the “Detect text” button to start the AI detection process.

5. Review the Report

Once the analysis is complete, review the detailed report.

The Bottom Line

The rise of AI in applications doesn’t just challenge students—it challenges institutions to rethink their approach to fairness, originality, and the future of work. So, are medical schools checking if you use AI? Some are, and others will likely follow.

But here’s the takeaway: AI isn’t going anywhere. For students, the key is using it ethically. For med schools, the focus should be on evaluating character and potential—not just catching clever use of algorithms.

As for Rahul? He got in. And no, he didn’t use AI to write his essay. But he did ask ChatGPT to brainstorm a few ideas—and I’d argue that’s a skill worth celebrating, not penalizing.

Frequently Asked Questions (FAQ)

1. Can schools see if you use AI?

Often, yes. Schools can flag AI-generated content, especially as AI detection tools grow more sophisticated. These tools analyze writing for patterns typical of AI, such as unnatural flow or overly polished sentences. However, detection isn't perfect: results depend on the tool used and on how much the AI-generated text has been edited, and human writing is sometimes flagged by mistake.

2. Can professors tell if you use AI?

Professors can sometimes tell if AI was used, especially if the writing lacks personal insight or depth. AI-generated content often follows a formulaic structure and lacks the emotional nuance that comes from personal experience. When it isn't obvious, AI detection tools can provide a second check.

3. Do universities check for AI content?

Many universities are beginning to check for AI-generated content as concerns about academic integrity grow in the age of AI. The worry is that students might rely on AI for essays, assignments, or research papers instead of demonstrating their own analytical and writing skills. To keep assessments fair, some universities are adopting AI content detectors.

4. Does AMCAS know if you use ChatGPT?

AMCAS (the American Medical College Application Service) doesn’t explicitly check for AI-generated content, but it does expect authenticity in applications. If you use ChatGPT or other AI tools, there’s a risk that the content may be flagged by automated systems, especially if it lacks a personal touch. Admissions committees value genuine experiences and personal reflection, which AI may struggle to provide.

5. Can medical schools see if you used AI?

Increasingly, yes. Like most higher education institutions, medical schools are aware of the potential for AI-generated content. As AI detection technologies advance, it’s becoming easier for schools to spot content that lacks originality, and medical schools, with their emphasis on ethics and integrity, may adopt AI content detectors to ensure that applicants are submitting authentic work.

6. Can colleges see that you used ChatGPT?

While colleges may not always have dedicated tools to detect ChatGPT usage, the growing trend of AI content detection means they may notice when content doesn’t match the applicant’s usual writing style or lacks personal experience. AI tools like ChatGPT can leave traces, making it increasingly difficult to pass off AI-generated content as your own.